Pilates instructors know a dirty secret: most people would rather skip class than risk looking uncoordinated in public.

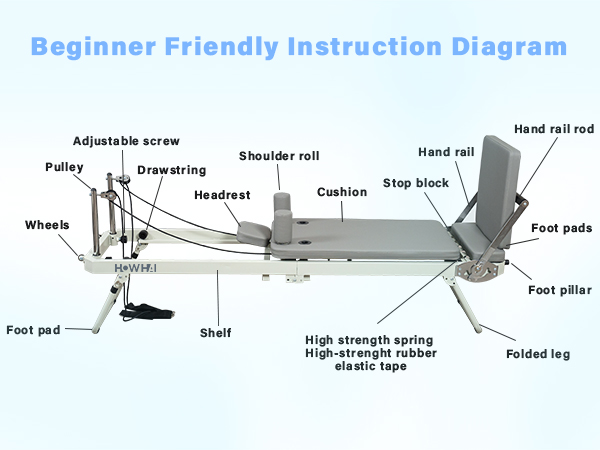

The reformer Pilates machine looks like a medieval torture device - all springs, pulleys, and moving parts that can snap back and hurt you if you don't know what you're doing.

So when my local studio owner said, "New people see everyone else looking graceful and get intimidated," I understood completely. Nobody wants to flail around on that contraption while everyone watches.

As someone who has skipped class for exactly this reason, I can confirm: we'll find every excuse to avoid the thing we actually need most - practice.

Turns out, AI adoption works exactly the same way.

Teams know they should be using AI tools. They've attended the training. They understand the benefits. But when it comes to actually using them in high-stakes situations? They find ways to stick with what they know.

The problem isn't the technology - it's that we're asking people to perform without practice.

When McKinsey accidentally tells the truth

If both a Pilates teacher and the world's most infamous consulting firm are identifying the same problem, you know it's real.

McKinsey just dropped a study that reveals what they call the "gen AI paradox": nearly eight in ten companies report using gen AI, but roughly the same share report no material impact on earnings.

The culprit? An imbalance between basic implementations (employee copilots, chatbots) that scale easily but deliver "diffuse benefits," and transformative applications that "seldom make it out of the pilot phase because of technical, organizational, data, and cultural barriers."

Ok, McKinsey charges $50,000 a week to fix problems like this, so take their framing with a grain of salt. But the underlying reality? That's real.

Walk into any company right now and you'll find the same story: AI pilots everywhere, transformation nowhere.

Here's what's actually happening. Too many companies treat AI like office software - roll it out, do some training, hope for the best. Meanwhile, most AI applications never escape pilot purgatory.

Why? Because the moment AI meets real human dynamics, everything falls apart.

The moment it all goes sideways

Last month, a senior director at a global consulting firm called us with a familiar problem.

She’d been tasked with rolling out a custom AI tool to hundreds of team members. The tool was solid - built on their proprietary data, designed for their specific workflows. But adoption was stuck.

"We did the training," she explained. "Live sessions, breakouts, use case presentations. People nodded along, asked good questions. But when it comes to actually using it with clients?" She paused. "They quietly revert to the old playbook."

The issue wasn't the technology. It was confidence. Teams knew how to use the tool in controlled settings, but the moment client pressure entered the equation, they reverted to what felt safe.

This pattern is everywhere. And it's getting worse.

This is where the World Economic Forum's warning hits home:

"Skill decay isn't slowing down, but it is getting much harder to see."

Your team looks capable until they're not. And by then, it's too late.

The gap isn't technical knowledge. It's the judgment to navigate moments when AI intersects with human skepticism, organizational politics, and high-stakes decisions.

We're asking people to perform without practice. Then we're surprised when they freeze, ditch the tools, or never engage with them at all.

So what's causing this confidence collapse?

Why your AI training is making things worse

Here's the dirty secret nobody talks about: most AI training creates overconfident beginners who crumble the moment things get messy.

Think about how this usually goes. Your team attends a workshop called "AI for Business Leaders" or "Prompt Engineering Bootcamp." They learn the features. They practice on clean examples. They leave feeling ready to change the world.

Two weeks later, reality delivers a cold slap.

When the unexpected happens - when results don't match expectations, when stakeholders ask probing questions, when the perfect demo environment meets messy reality - the confidence evaporates.

The problem isn't the people or the technology. It's that we're training for success, not for struggle. And learning? Learning is struggle. Or rather, it's wicked.

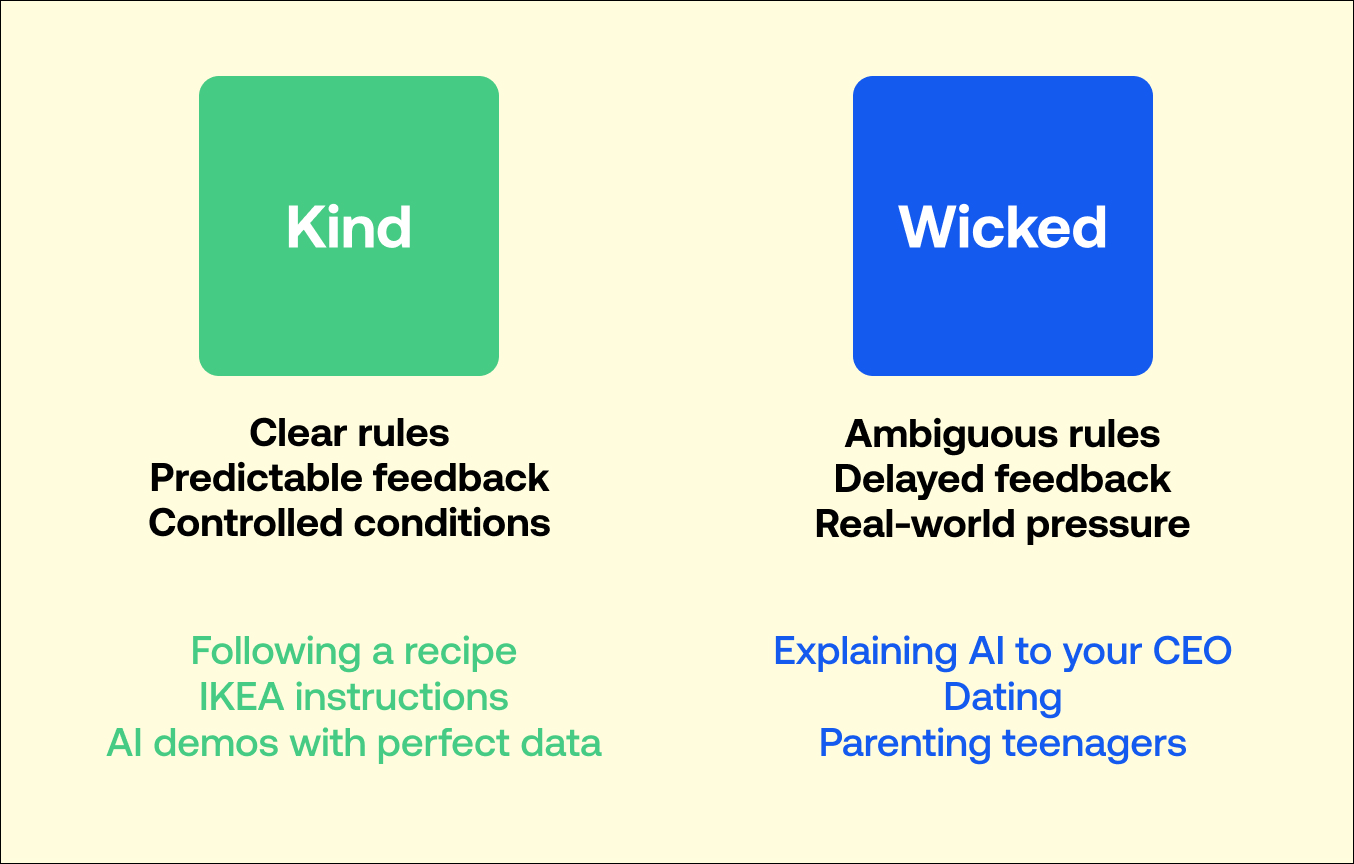

Kind vs. Wicked

The problem runs deeper than bad training design. It's rooted in a fundamental misunderstanding of how humans actually build capability.

Learning scientists have known this for decades. There's a massive difference between "kind" learning environments (clear rules, predictable feedback) and "wicked" ones (ambiguous rules, delayed feedback, shifting contexts).

AI tools work fine in kind environments - clean data, clear prompts, predictable outputs.

But business decisions? That's wicked territory. Competing priorities, incomplete information, stakeholders with hidden agendas.

We're training people in clean, predictable environments, then expecting them to perform in messy, unpredictable ones.

It's like teaching someone to drive in an empty parking lot, then throwing them into Manhattan traffic (ok, I just gave myself PTSD about my first day driving in the East Village…).

You don’t train chefs with cookbooks

Dr. Lia DiBello's research with over 7,000 participants shows that scenario-based training accelerates learning by several months compared to traditional methods. The difference? Practice under pressure.

You don't train great chefs with cookbooks - you put them in the kitchen.

Elite surgeons rehearse procedures until they become instinctive. Fighter pilots practice split-second decisions in simulators that feel real.

But business professionals? We give them frameworks and hope for the best.

When you think about it, this approach is completely backwards.

What nobody admits about AI adoption

The real reason AI initiatives stall? The people implementing them feel like frauds.

Deep down, they're terrified someone's going to ask a question they can't answer. So they hedge. They qualify every AI-generated insight with disclaimers. They present recommendations tentatively, ready to backtrack at the first sign of pushback.

This isn't incompetence. It's human nature. We avoid using anything we don't feel confident with, especially when the stakes feel high.

The WEF puts it perfectly:

"We're going to find ourselves with a workforce that looks effective and capable until catastrophe strikes. And that's a terrible time to discover you don't have the resources required to put out the fire."

It’s not about incompetence. It’s about confidence.

The confidence ladder most companies never climb

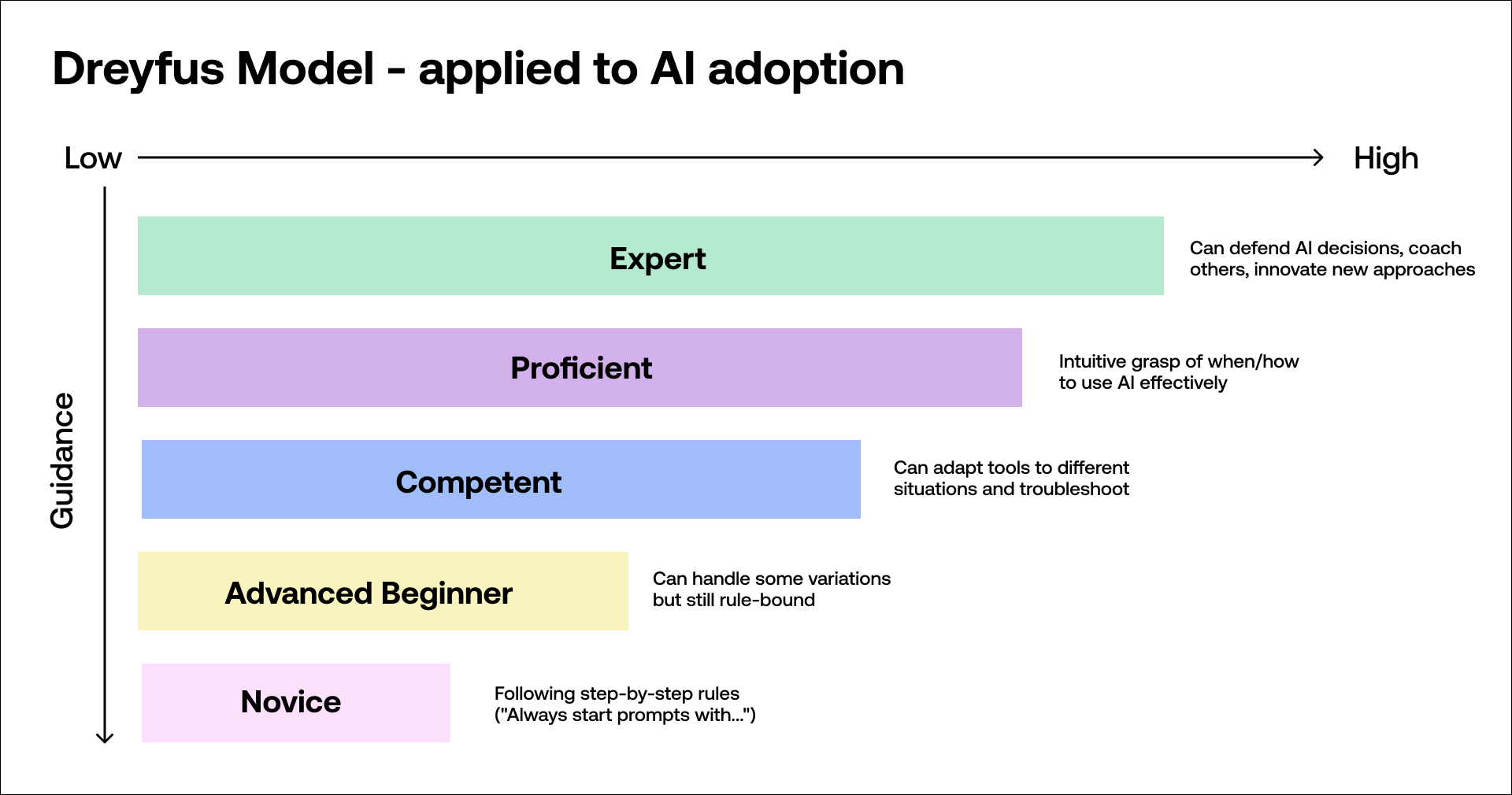

Ok, what actually builds confidence with a new capability?

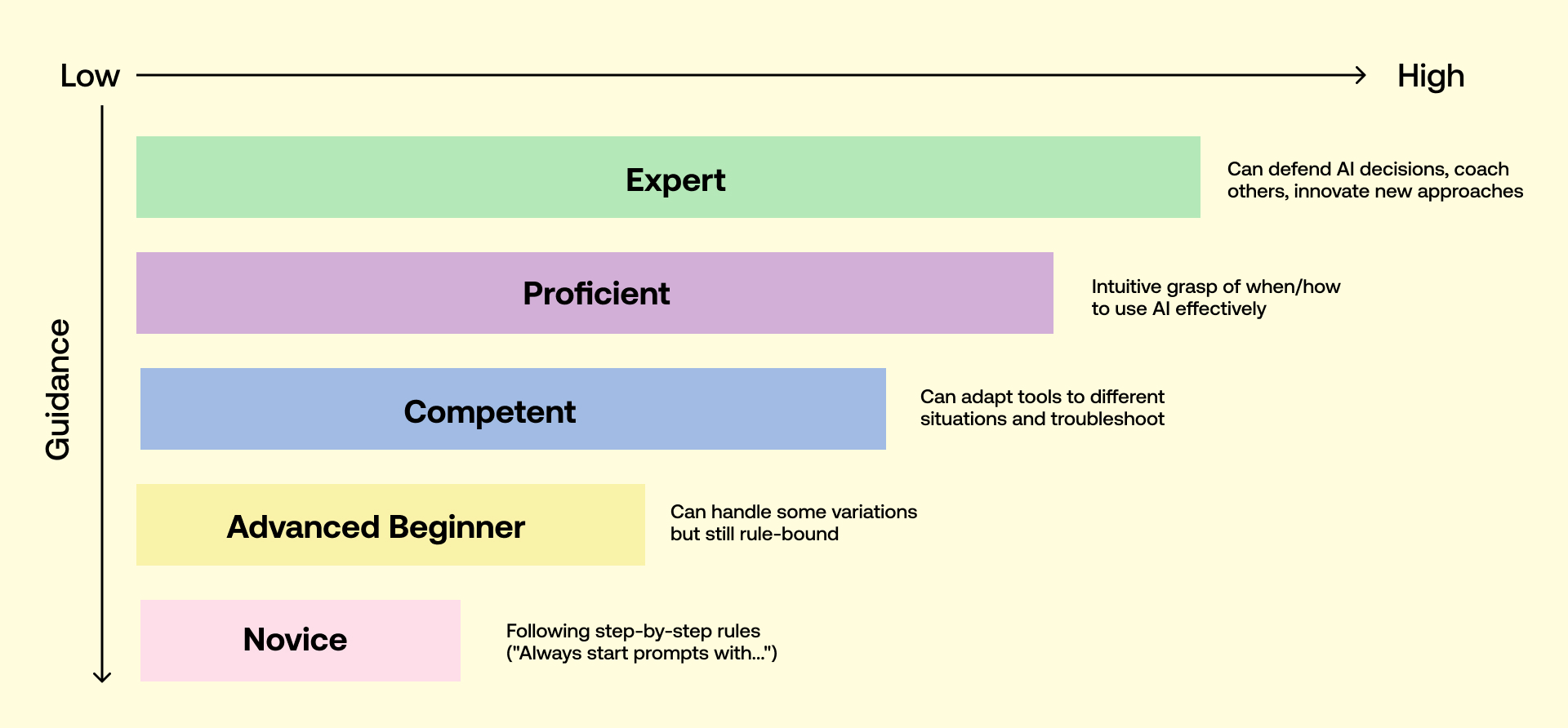

Cognitive scientists call it the Dreyfus model - five stages from novice to expert (novice, advanced beginner, competent, proficient, expert). This is how it maps to AI adoption:

The problem? Most companies stop at stage two and wonder why people freeze up when stage-five moments arrive.

But authority - the ability to stand behind AI-enhanced decisions when questioned - requires something else entirely. It requires having been tested and not broken.

You can't build authority from documentation.

You build it by surviving challenges that feel real but aren't fatal.

Where this gets practical

Companies succeeding with AI aren't just training differently - they're creating environments where the messy stuff can happen safely.

Instead of theoretical workshops, they run scenarios where people practice explaining AI recommendations to skeptical clients. Instead of feature demos, they simulate what happens when AI tools crash mid-presentation.

They're borrowing from industries that get it: medicine, aviation, even elite kitchens where chefs practice knife skills until muscle memory takes over.

The surgeon doesn't just study anatomy - they practice procedures until steady hands become instinctive. The pilot doesn't just memorize systems - they train in simulators until split-second decisions feel automatic.

Your team needs the same thing. Not more information about workflow automations, but the confidence that comes from practicing the moments that matter.

Because when someone challenges your AI-driven strategy in front of the board, you don't want to be learning on the fly.

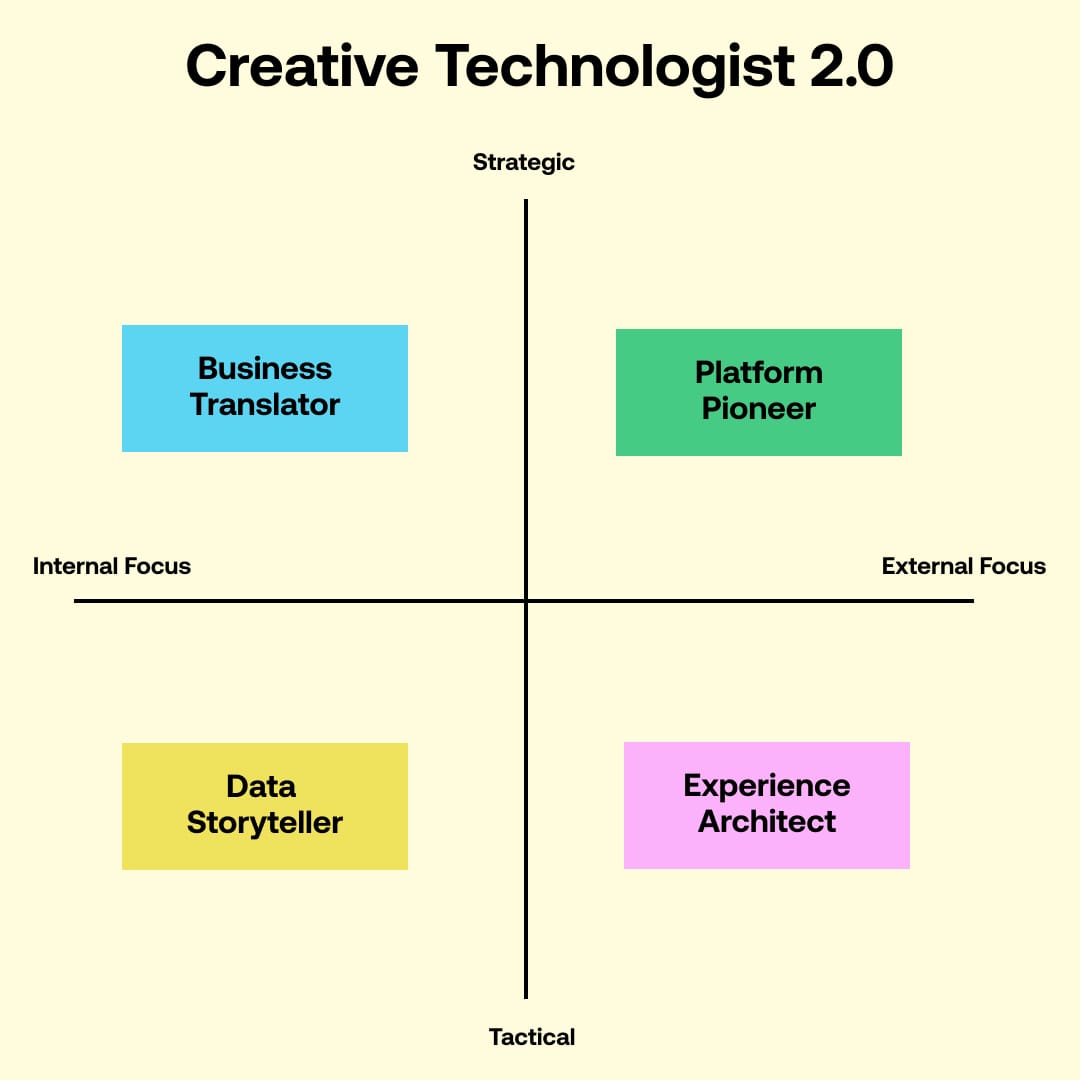

The missing role that bridges the gap

Most AI initiatives are led by people who can talk beautifully about possibilities but can't show you how AI works in your specific context.

You get consultants who recently pivoted from "digital transformation" to AI, or technical experts who can't make their knowledge accessible.

The companies succeeding with AI work with a different breed: creative technologists who exist in that space between strategy and execution.

These aren't traditional consultants or pure technologists - they translate between business problems and technical solutions.

More importantly, they understand that confidence isn't built through PowerPoint - it's built through practice.

At Wavetable, we've codified this into what we call 'Fast Break' - strategic rehearsal for AI adoption that provides environments where safe failure becomes useful learning.

Because when someone challenges your AI-driven strategy in front of the board, you don't want to be learning on the job.

The choice every leader faces

You have two options.

Option 1: Keep doing what everyone else does.

Roll out AI tools, provide some training, hope your team figures it out when the pressure's on. Join the 80% of companies using AI with nothing to show for it.

Option 2: Build confidence before you need it.

Create practice spaces where your team can rehearse the hard conversations, simulate tool failures, and practice defending AI-driven decisions to skeptics.

The WEF reminds us that "success isn't found in acknowledgment. It comes from action." But action without preparation isn't strategy - it's hope.

The companies winning with AI aren't implementing better technology. They're building teams who've practiced the moments that matter most.

Teams who can adopt new professional identities when AI changes their role. Who punch above their weight in client presentations. Who reduce build costs dramatically by knowing exactly when AI adds value and when it doesn't.

Your team will face those high-stakes moments eventually. The only question is whether you'll prepare them now or discover their limits when a client is watching.

The choice is yours. But the clock is ticking - your competitors are already practicing.

Ready to start building confidence before you need it? Let's talk.
